In this guide I show how to use Vagrant with AWS and, in the process, automate the installation of Puppet Enterprise.

I separate code and data: a generic Vagrantfile holds the code, and a servers.yaml file holds all the data that changes from user to user.

To install the Puppet Enterprise server, I include the automated provisioning script I use with Vagrant. The script uses AWS tags to set the hostname of the launched server.

Prerequisites:

- Vagrant

- vagrant-aws plug-in:

$ vagrant plugin install vagrant-aws

While you can add your AWS credentials to the Vagrantfile, it is not recommended. A better way is to install and configure the AWS CLI tools:

$ aws configure
AWS Access Key ID [****************XYYY]: XXXXXXXXYYY
AWS Secret Access Key [****************ZOOO]: ZZZZZZZOOO
Default region name [us-east-1]: us-east-1
Default output format [None]: json
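Before running Vagrant, it can help to confirm that the profile written by aws configure actually contains both keys. The sketch below is a hypothetical pre-flight check, not part of the project: parse_ini and profile_configured? are illustrative helpers, the default profile name is an assumption, and it uses a deliberately simple INI parser rather than any AWS library.

```ruby
# Minimal INI parser (hypothetical helper): returns { "section" => { "key" => "value" } }
def parse_ini(text)
  sections = Hash.new { |hash, name| hash[name] = {} }
  current = nil
  text.each_line do |raw|
    line = raw.strip
    next if line.empty? || line.start_with?('#', ';')

    if (m = line.match(/\A\[(.+)\]\z/))
      current = m[1].strip                      # start of a new [profile] section
    elsif current && line.include?('=')
      key, value = line.split('=', 2).map(&:strip)
      sections[current][key] = value
    end
  end
  sections
end

# True when the profile has a non-empty access key ID and secret access key
def profile_configured?(text, profile = 'default')
  settings = parse_ini(text)[profile]
  %w[aws_access_key_id aws_secret_access_key].all? { |k| !settings[k].to_s.empty? }
end

# Sample credentials file using the placeholder values from above
sample = <<~INI
  [default]
  aws_access_key_id = XXXXXXXXYYY
  aws_secret_access_key = ZZZZZZZOOO
INI

puts profile_configured?(sample)          # prints true
puts profile_configured?(sample, 'work')  # prints false
```

To check your real setup, pass File.read(File.expand_path('~/.aws/credentials')) instead of the sample string; that path is the AWS CLI's default location.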

TL;DR: To get started right away, download the project from GitHub (vagrant-aws-puppetserver); otherwise, follow the guide below.

Create Vagrantfile

The Vagrantfile below reads its data from a YAML file (servers.yaml). This lets you control the configuration by editing the YAML file instead of modifying the Vagrantfile code, keeping code and data separate.

// Vagrantfile

# -*- mode: ruby -*-
# vi: set ft=ruby :

# Specify minimum Vagrant version and Vagrant API version
Vagrant.require_version ">= 1.6.0"
VAGRANTFILE_API_VERSION = "2"

# Require YAML module
require 'yaml'

# Read YAML file with box details
servers = YAML.load_file('servers.yaml')

Vagrant.configure(VAGRANTFILE_API_VERSION) do |config|
  # Iterate through entries in YAML file
  servers.each do |server|
    config.vm.define server['name'] do |srv|
      srv.vm.box = server['box']
      srv.vm.box_url = server['box_url']

      srv.vm.provider :aws do |aws, override|
        ### Don't set credentials here; it is better to use awscli with a profile
        #aws.access_key_id = "YOUR KEY"
        #aws.secret_access_key = "YOUR SECRET KEY"
        #aws.session_token = "SESSION TOKEN"

        aws.region = server["aws_region"] if server["aws_region"]
        aws.keypair_name = server["aws_keypair_name"] if server["aws_keypair_name"]
        aws.subnet_id = server["aws_subnet_id"] if server["aws_subnet_id"]
        aws.associate_public_ip = server["aws_associate_public_ip"] if server["aws_associate_public_ip"]
        aws.security_groups = server["aws_security_groups"] if server["aws_security_groups"]
        aws.iam_instance_profile_name = server['aws_iam_role'] if server['aws_iam_role']
        aws.ami = server["aws_ami"] if server["aws_ami"]
        aws.instance_type = server["aws_instance_type"] if server["aws_instance_type"]
        aws.tags = server["aws_tags"] if server["aws_tags"]
        aws.user_data = server["aws_user_data"] if server["aws_user_data"]

        override.ssh.username = server["aws_ssh_username"] if server["aws_ssh_username"]
        override.ssh.private_key_path = server["aws_ssh_private_key_path"] if server["aws_ssh_private_key_path"]

        # Sync the project folder one way to this instance with rsync
        override.vm.synced_folder ".", "/vagrant", type: "rsync"
      end

      # Attach the provisioning script to this machine only
      # (config.vm.provision would add it to every machine in servers.yaml)
      srv.vm.provision :shell, path: server["provision"] if server["provision"]
    end
  end
end
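Each provider setting in the Vagrantfile is guarded by "if server[...]", so a key that is missing from servers.yaml leaves the vagrant-aws default in place. The stand-alone sketch below reproduces that mapping outside Vagrant so you can see which options a given entry would set. AWS_KEY_MAP and aws_settings are hypothetical names for illustration, and a plain Hash stands in for Vagrant's provider object. Note that a false value (such as aws_associate_public_ip: false) is also skipped by the truthiness guard.

```ruby
require 'yaml'

# Hypothetical table: servers.yaml key => vagrant-aws setting it feeds in the Vagrantfile
AWS_KEY_MAP = {
  'aws_region'              => :region,
  'aws_keypair_name'        => :keypair_name,
  'aws_subnet_id'           => :subnet_id,
  'aws_associate_public_ip' => :associate_public_ip,
  'aws_security_groups'     => :security_groups,
  'aws_iam_role'            => :iam_instance_profile_name,
  'aws_ami'                 => :ami,
  'aws_instance_type'       => :instance_type,
  'aws_tags'                => :tags,
  'aws_user_data'           => :user_data
}.freeze

# Return the provider settings one servers.yaml entry would apply.
# Mirrors the Vagrantfile's "if server[key]" guards: nil AND false are skipped.
def aws_settings(server)
  AWS_KEY_MAP.each_with_object({}) do |(yaml_key, option), settings|
    value = server[yaml_key]
    settings[option] = value if value
  end
end

entry = YAML.safe_load(<<~SERVERS).first
  - name: puppet4
    aws_region: "us-east-1"
    aws_associate_public_ip: false
    aws_instance_type: m3.xlarge
SERVERS

puts aws_settings(entry).keys.inspect  # prints [:region, :instance_type]
```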

Create servers.yaml

This file contains the information used by the Vagrantfile, including:

AWS region: The region in which the EC2 instance will run

AWS keypair: The name of the key pair used to connect to the launched EC2 instance

AWS subnet id: The subnet in which the EC2 instance will sit in the AWS network

AWS associate public ip: Whether the instance needs a public IP address (true or false)

AWS security group: The security group(s) to associate with the instance; they should allow the ports Puppet Server needs, plus anything else you require (SSH, etc.)

AWS ami: The AMI to use; I am using CentOS 7

AWS instance type: Puppet Server needs enough CPU and RAM; in my testing, m3.xlarge was appropriate

AWS SSH username: The login user of the EC2 instance, which depends on the AMI you choose (the CentOS AMI I used expects ec2-user)

AWS SSH private key path: The local path to the private key of the key pair

AWS User Data: A bash script passed as user data; it comments out the requiretty setting in /etc/sudoers so that Vagrant can run sudo commands over SSH on the launched instance

AWS Tags: Not required for Vagrant and AWS/EC2, but my provisioning script uses the Name tag as the system's hostname; the other two tags are there for demonstration purposes

provision: The provisioning script that runs on the EC2 instance; this is the script that installs the Puppet Enterprise server

AWS IAM Role: You don't need a role to use Vagrant with AWS/EC2, but I use a specific IAM role so that the launched EC2 instance can read its own AWS tags. The Vagrantfile passes this value to iam_instance_profile_name, so it must be the name of an instance profile that contains the role (when you create an EC2 role in the IAM console, an instance profile with the same name is created for you). The role must allow ec2:DescribeTags, as in the following IAM policy:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "XXXXXXX",
      "Action": [
        "ec2:DescribeTags"
      ],
      "Effect": "Allow",
      "Resource": "*"
    }
  ]
}
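If you prefer to create this policy from a script rather than pasting it into the IAM console, the sketch below builds the same document with Ruby's standard json library, checks that it really allows ec2:DescribeTags, and prints it. The placeholder Sid is left out because Sid is optional in IAM policies; the script and file names in the usage note are assumptions.

```ruby
require 'json'

# Same permissions as the policy above: read EC2 tags, nothing else
policy = {
  'Version'   => '2012-10-17',
  'Statement' => [
    {
      'Effect'   => 'Allow',
      'Action'   => ['ec2:DescribeTags'],
      'Resource' => '*'
    }
  ]
}

# Sanity check before using it: some Allow statement must include ec2:DescribeTags
allowed = policy['Statement'].any? do |stmt|
  stmt['Effect'] == 'Allow' && Array(stmt['Action']).include?('ec2:DescribeTags')
end
raise 'policy does not allow ec2:DescribeTags' unless allowed

# Print the JSON document so it can be redirected to a file
puts JSON.pretty_generate(policy)
```

For example, saving it as describe_tags_policy.rb and running ruby describe_tags_policy.rb > describe-tags-policy.json produces a file you can pass to aws iam create-policy --policy-name describe-tags --policy-document file://describe-tags-policy.json.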

Now add your own data to the servers.yaml file:

// servers.yaml

---
- name: puppet4
  box: dummy
  box_url: https://github.com/mitchellh/vagrant-aws/raw/master/dummy.box
  aws_region: "us-east-1"
  aws_keypair_name: "john-key"
  aws_subnet_id: "subnet-0001"
  aws_associate_public_ip: false
  aws_security_groups: ['sg-0001']
  aws_ami: "ami-0001"
  aws_instance_type: m3.xlarge
  aws_ssh_username: "ec2-user"
  aws_iam_role: "iam-able-to-describe-tags"
  aws_ssh_private_key_path: "/Users/john/.ssh/john-key.pem"
  aws_user_data: "#!/bin/bash\nsed -i -e 's/^Defaults.*requiretty/# Defaults requiretty/g' /etc/sudoers"
  aws_tags:
    Name: 'puppet4'
    tier: 'stage'
    application: 'puppetserver'
  update: false
  provision: install_puppetserver.sh
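Because YAML is indentation sensitive, a misplaced space in servers.yaml (for example, tags that are not nested under aws_tags) only surfaces as a confusing error once Vagrant runs. The sketch below is a hypothetical pre-flight check, not part of the project: REQUIRED_KEYS and check_servers are illustrative names, and the key list only covers what this guide relies on. It demonstrates the check on an entry whose Name tag lost its indentation.

```ruby
require 'yaml'

# Hypothetical list of keys this guide's Vagrantfile and provision script rely on
REQUIRED_KEYS = %w[name box aws_region aws_ami aws_instance_type
                   aws_ssh_username aws_ssh_private_key_path aws_tags].freeze

# Return a list of problems in the parsed servers.yaml data (empty when OK)
def check_servers(servers)
  return ['top level must be a list of servers'] unless servers.is_a?(Array)

  servers.each_with_index.flat_map do |server, i|
    next ["entry #{i} is not a mapping"] unless server.is_a?(Hash)

    label = server['name'] || "entry #{i}"
    errors = (REQUIRED_KEYS - server.keys).map { |k| "#{label}: missing #{k}" }
    tags = server['aws_tags']
    # The provision script derives the hostname from the Name tag
    unless tags.is_a?(Hash) && tags['Name'].is_a?(String)
      errors << "#{label}: aws_tags must be a mapping with a Name tag"
    end
    errors
  end
end

# Name is not indented under aws_tags, so YAML parses aws_tags as empty (nil)
broken = YAML.safe_load(<<~SERVERS)
  - name: puppet4
    box: dummy
    aws_region: "us-east-1"
    aws_ami: "ami-0001"
    aws_instance_type: m3.xlarge
    aws_ssh_username: "ec2-user"
    aws_ssh_private_key_path: "/Users/john/.ssh/john-key.pem"
    aws_tags:
    Name: 'puppet4'
SERVERS

puts check_servers(broken)  # prints puppet4: aws_tags must be a mapping with a Name tag
```

To check your real file, call check_servers(YAML.safe_load(File.read('servers.yaml'))) from the project directory.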

At this point you can spin up EC2 instances with the Vagrantfile and servers.yaml file above (for example, with vagrant up --provider=aws). If you also add provision: install_puppetserver.sh to servers.yaml, as I did, and save the script below next to the Vagrantfile, you will have a Puppet Enterprise server ready to go.

// install_puppetserver.sh

#!/bin/bash -xe

# Ensure pip is installed
if ! which pip; then
  curl "https://bootstrap.pypa.io/get-pip.py" -o "get-pip.py"
  python get-pip.py
fi

# Upgrade pip
/bin/pip install --upgrade pip

# Upgrade awscli tools (they are installed in the AMI)
/bin/pip install --upgrade awscli

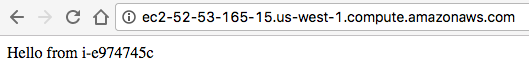

# Get hostname from the AWS Name tag (requires the EC2 instance to have an IAM role that allows DescribeTags)
AWS_INSTANCE_ID=$(curl -s http://169.254.169.254/latest/meta-data/instance-id)
AWS_REGION=$(curl -s http://169.254.169.254/latest/dynamic/instance-identity/document | grep region | cut -d\" -f4)
AWSHOSTNAME=$(aws ec2 describe-tags --region "${AWS_REGION}" --filters "Name=resource-id,Values=${AWS_INSTANCE_ID}" --query "Tags[?Key=='Name'].Value | [0]" --output text)

# Set hostname (use the AWS Name tag)
hostnamectl set-hostname "${AWSHOSTNAME}.cpg.org"

# Update system and install wget, git
yum update -y
yum install wget git -y

# Map this instance's private IP to the names puppet and puppet.cpg.org in /etc/hosts
echo "$(curl -s http://169.254.169.254/latest/meta-data/local-ipv4) puppet puppet.cpg.org" >> /etc/hosts

# Download and unpack Puppet Enterprise 2016.4.0
wget "https://pm.puppetlabs.com/cgi-bin/download.cgi?dist=el&rel=7&arch=x86_64&ver=2016.4.0" -O puppet.2016.4.0.tar.gz
tar -xvzf puppet.2016.4.0.tar.gz
cd puppet-enterprise*

# Create pe.conf (HOCON format, so entries on separate lines need no commas)
cat > pe.conf <<'PECONF'
{
  "console_admin_password": "puppet"
  "puppet_enterprise::puppet_master_host": "%{::trusted.certname}"
}
PECONF

echo "Install Puppetserver"
./puppet-enterprise-installer -c pe.conf

echo "Adding * to autosign.conf"
echo '*' >> /etc/puppetlabs/puppet/autosign.conf

# Run puppet agent; -t implies --detailed-exitcodes, where 2 means "changes applied"
# and must not be treated as a failure by bash -e or by Vagrant
/usr/local/bin/puppet agent -t || [ $? -eq 2 ]
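The script extracts the region with grep and cut and then asks EC2 for the instance's Name tag. If you would rather parse those JSON documents properly (for example, in your own tooling), the sketch below does the same two steps in Ruby with the standard json library. It works on sample strings shaped like the instance identity document and the aws ec2 describe-tags output; the sample instance ID and the fqdn_from helper are hypothetical, and the cpg.org domain is the one used in the script.

```ruby
require 'json'

# Sample of http://169.254.169.254/latest/dynamic/instance-identity/document (trimmed)
identity_doc = '{"instanceId": "i-0123456789abcdef0", "region": "us-east-1"}'

# Sample of: aws ec2 describe-tags --filters "Name=resource-id,Values=<instance-id>"
tags_doc = <<~TAGS
  {"Tags": [
    {"ResourceType": "instance", "ResourceId": "i-0123456789abcdef0", "Key": "tier", "Value": "stage"},
    {"ResourceType": "instance", "ResourceId": "i-0123456789abcdef0", "Key": "Name", "Value": "puppet4"}
  ]}
TAGS

# Hypothetical helper: build the FQDN from the Name tag, as hostnamectl does in the script
def fqdn_from(tags_json, domain)
  name_tag = JSON.parse(tags_json).fetch('Tags').find { |t| t['Key'] == 'Name' }
  raise 'instance has no Name tag' unless name_tag

  "#{name_tag['Value']}.#{domain}"
end

region = JSON.parse(identity_doc).fetch('region')
puts region                          # prints us-east-1
puts fqdn_from(tags_doc, 'cpg.org')  # prints puppet4.cpg.org
```

Failing loudly when the Name tag is missing is a design choice here; the bash version would instead set a hostname of None.cpg.org, because --output text prints None for an empty result.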